ChatCompletion 400 in Azure OpenAI – Debugging the Black Box

TLDR: If you’re getting a 400 Bad Request error with Azure OpenAI’s ChatCompletion API, and there’s no useful error message, try adding custom logging and retry policies to your client setup.

Here’s a quick fix using a custom PipelinePolicy to log detailed request/response information:

Custom Logging Middleware for Better Error Visibility

public class CustomLoggingPolicy : PipelinePolicy

{

public override void Process(PipelineMessage message, IReadOnlyList<PipelinePolicy> pipeline, int currentIndex)

{

ProcessNext(message, pipeline, currentIndex);

}

public override async ValueTask ProcessAsync(PipelineMessage message, IReadOnlyList<PipelinePolicy> pipeline, int currentIndex)

{

Console.WriteLine($"Sending Request to {message.Request.Uri}");

await ProcessNextAsync(message, pipeline, currentIndex);

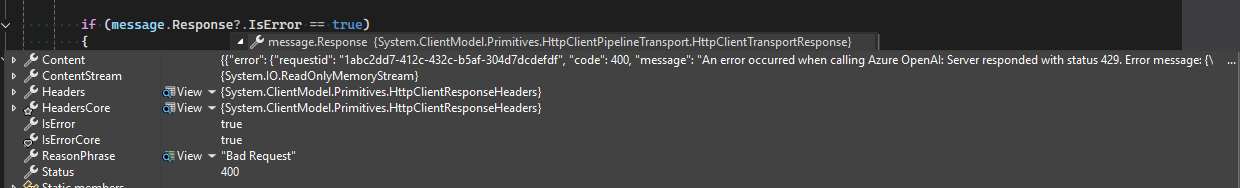

if (message.Response?.IsError == true)

{

Console.WriteLine($"Request to {message.Request.Uri}, " +

$"ReasonPhrase: {message.Response.ReasonPhrase} " +

$"ResponseContent: {message.Response.Content}");

}

}

}
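The response body that the logging policy prints is usually JSON, so a small helper can pull out the error code and message and make the log line easier to scan. This is only a minimal sketch: ErrorBodyParser and Describe are hypothetical names of mine, and the {"error": {"code": ..., "message": ...}} shape is an assumption about the payload, which is why the helper falls back to the raw text whenever the body looks different.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper (not part of any SDK): summarizes an error body for logging.
public static class ErrorBodyParser
{
    // Returns "code: message" when the body has the assumed {"error":{...}} shape,
    // otherwise returns the body unchanged.
    public static string Describe(string body)
    {
        if (string.IsNullOrWhiteSpace(body))
        {
            return "(empty body)";
        }

        try
        {
            using JsonDocument doc = JsonDocument.Parse(body);
            JsonElement root = doc.RootElement;

            if (root.ValueKind == JsonValueKind.Object &&
                root.TryGetProperty("error", out JsonElement error) &&
                error.ValueKind == JsonValueKind.Object)
            {
                string code = error.TryGetProperty("code", out JsonElement c) ? c.ToString() : "(no code)";
                string text = error.TryGetProperty("message", out JsonElement m) ? m.ToString() : "(no message)";
                return $"{code}: {text}";
            }
        }
        catch (JsonException)
        {
            // Not JSON at all; fall through and return the raw text.
        }

        return body;
    }
}
```

Inside the logging policy, this could be called as ErrorBodyParser.Describe(message.Response.Content.ToString()) in place of printing the raw content.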

Custom Retry Policy for Rate Limiting

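using System;
using System.ClientModel.Primitives;
using System.Net;
using System.Threading.Tasks;

// Fails fast on 429 (Too Many Requests) instead of retrying; defers to the default policy otherwise.
public class CustomClientRetryPolicy : ClientRetryPolicy
{
    protected override bool ShouldRetry(PipelineMessage message, Exception? exception)
        => !IsRateLimited(message) && base.ShouldRetry(message, exception);

    protected override async ValueTask<bool> ShouldRetryAsync(PipelineMessage message, Exception? exception)
        => !IsRateLimited(message) && await base.ShouldRetryAsync(message, exception);

    // Response is null when the request failed before any response arrived.
    private static bool IsRateLimited(PipelineMessage message)
        => message.Response?.Status == (int)HttpStatusCode.TooManyRequests;
}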

Apply Policies to AzureOpenAIClientOptions

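using System;
using System.ClientModel.Primitives;
using Azure.AI.OpenAI;
using Azure.Identity;

AzureOpenAIClientOptions clientOptions = new();

// BeforeTransport runs after the retry policy, so every attempt gets logged.
clientOptions.AddPolicy(new CustomLoggingPolicy(), PipelinePosition.BeforeTransport);
clientOptions.RetryPolicy = new CustomClientRetryPolicy();

AzureOpenAIClient azureOpenAiClient = new(
    new Uri("https://your-openai-instance.openai.azure.com/"), // replace with your resource endpoint
    new DefaultAzureCredential(),
    clientOptions);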

This should help surface useful error messages and prevent excessive retries when encountering rate limits.
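To see the policies at work, it helps to make one call and look at what the exception carries when the call fails. The snippet below is a rough sketch rather than a definitive pattern: ChatProbe and AskAsync are hypothetical names, the deployment name is whatever you configured in Azure, and it assumes the response was buffered so the raw content can be read.

```csharp
using System;
using System.ClientModel;
using System.ClientModel.Primitives;
using System.Collections.Generic;
using System.Threading.Tasks;
using Azure.AI.OpenAI;
using OpenAI.Chat;

// Hypothetical helper: sends one prompt and dumps the failure details if the call throws.
public static class ChatProbe
{
    public static async Task<string> AskAsync(AzureOpenAIClient client, string deploymentName, string prompt)
    {
        ChatClient chat = client.GetChatClient(deploymentName);
        var messages = new List<ChatMessage> { new UserChatMessage(prompt) };

        try
        {
            ClientResult<ChatCompletion> result = await chat.CompleteChatAsync(messages);
            ChatCompletion completion = result.Value;
            return completion.Content.Count > 0 ? completion.Content[0].Text : string.Empty;
        }
        catch (ClientResultException ex)
        {
            // The exception exposes the HTTP status and, when available, the raw response.
            PipelineResponse? raw = ex.GetRawResponse();
            Console.WriteLine($"Status: {ex.Status}, Body: {raw?.Content}");
            throw;
        }
    }
}
```

Calling await ChatProbe.AskAsync(azureOpenAiClient, "your-deployment-name", "ping") with the client configured above should print both the policy's log lines and the status from the exception, which makes it easier to compare what each one reports.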

References:

- GitHub Issue: Azure SDK .NET #47280

- User Credit: jaliyaudagedara’s comment

The Journey: Debugging OpenAI 400 Errors

My Specific Issue: Rate Limits (But I Tried Everything First)

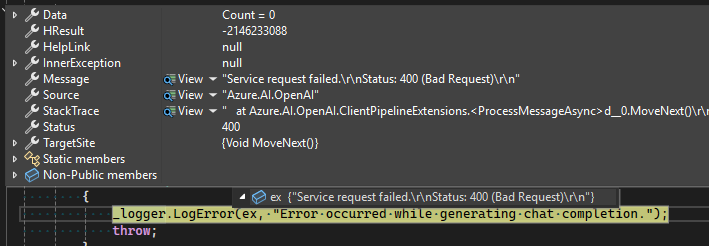

When I first encountered the 400 Bad Request error in Azure OpenAI’s ChatCompletion API, I had no clue what was wrong. The response contained zero useful details, which made debugging frustrating.

At first, I considered multiple possible causes and tested each one before finally discovering that rate limits were the real issue. Here’s how I approached debugging:

- Checked API Key and Authentication ✅

- Used

ApiKeyCredentialto ensure I wasn’t using incorrect credentials. - Verified that my deployment name matched what I set up in Azure OpenAI.

- Used

- Validated Request Payload ✅

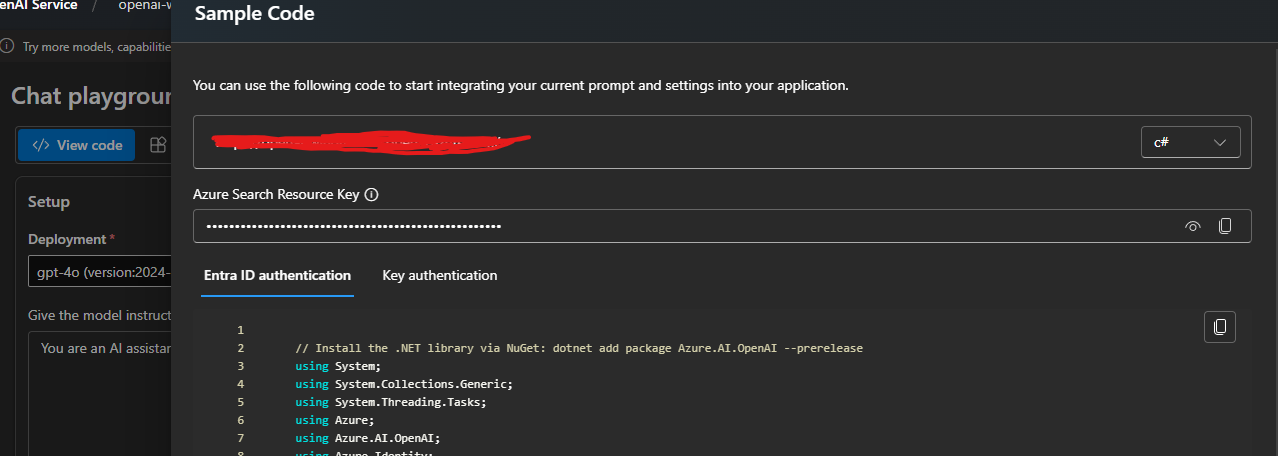

- Manually tested different parameters (

MaxTokens,Temperature,TopP) to see if invalid values were causing the issue. - Used Azure’s AI Studio (

ai.azure.com) sample code to compare it to my code.

- Manually tested different parameters (

- Suspected Rate Limits (Bingo! 🎯)

- After exhausting all other options, I started logging request details.

- Turns out, Azure sometimes returns 400 instead of 429 for rate limits!

- Implementing custom logging and retry policies (as shown earlier) finally helped identify the root cause.

Debugging Journey: Searching for the Solution

I searched through the README, official documentation, and wiki articles, but they didn’t provide a clear answer. Eventually, I stumbled upon a GitHub issue that described symptoms similar to mine.

That’s when I found jaliyaudagedara’s comment on that issue, which proposed adding a custom logging policy to reveal more details about the API response. This helped me confirm the issue was related to rate limiting.

Lessons Learned

- Always log request/response details to debug issues.

- Rate limits can return misleading errors: watch for a 400 that might really be a 429.

- Azure OpenAI SDK lacks detailed error handling; adding a Custom Logging Policy helps.

- Check AI Studio (ai.azure.com) for payload validation before coding.

Future Improvements

- Automate rate limit handling with dynamic backoff instead of outright failing on 429 errors.

- Improve error messages in the SDK (maybe even contribute to the official repo 🤔).

- Experiment with batching requests to reduce token consumption.
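For the first item, one possible direction is to keep retrying on 429 but control how long to wait between attempts. The sketch below is untested against a live service and rests on a few assumptions: RetryDelay and BackoffRetryPolicy are hypothetical names, the 1 second base and 30 second cap are arbitrary, and the Retry-After header is assumed to carry a number of seconds when present. It relies on the GetNextDelay hook of ClientRetryPolicy and leaves the retry decision itself to the base class.

```csharp
using System;
using System.ClientModel.Primitives;
using System.Globalization;

// Hypothetical helper: pure delay calculation, kept separate so it is easy to test.
public static class RetryDelay
{
    // Exponential backoff: baseDelay * 2^(tryCount - 1), capped at maxDelay.
    // A positive Retry-After value (in seconds) wins when it is longer than the backoff.
    public static TimeSpan Compute(int tryCount, string? retryAfterSeconds, TimeSpan baseDelay, TimeSpan maxDelay)
    {
        int exponent = Math.Clamp(tryCount - 1, 0, 30);
        double backoffMs = Math.Min(
            baseDelay.TotalMilliseconds * Math.Pow(2, exponent),
            maxDelay.TotalMilliseconds);
        TimeSpan backoff = TimeSpan.FromMilliseconds(backoffMs);

        if (double.TryParse(retryAfterSeconds, NumberStyles.Float, CultureInfo.InvariantCulture, out double seconds)
            && seconds > 0)
        {
            TimeSpan serverHint = TimeSpan.FromSeconds(seconds);
            return serverHint > backoff ? serverHint : backoff;
        }

        return backoff;
    }
}

// Hypothetical policy: default retry decisions, custom wait time between attempts.
public class BackoffRetryPolicy : ClientRetryPolicy
{
    public BackoffRetryPolicy(int maxRetries = 5) : base(maxRetries) { }

    protected override TimeSpan GetNextDelay(PipelineMessage message, int tryCount)
    {
        string? retryAfter = null;
        message.Response?.Headers.TryGetValue("Retry-After", out retryAfter);
        return RetryDelay.Compute(tryCount, retryAfter, TimeSpan.FromSeconds(1), TimeSpan.FromSeconds(30));
    }
}
```

Swapping it in would be a one-line change: clientOptions.RetryPolicy = new BackoffRetryPolicy();. Keeping the delay math in a static method means it can be unit tested without a network call.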

Final Thoughts

Debugging Azure OpenAI’s 400 errors was frustrating, but this journey reinforced a crucial lesson:

📌 When APIs don’t give you useful error messages, build your own debugging tools.

If you’re struggling with similar issues, try implementing a Custom Logging & Retry Policy—it made a world of difference for me.

If you’ve faced weird OpenAI API issues, I’d love to hear about them.

🔗 Related Links

- GitHub Issue Discussion: Azure SDK .NET #47280

- Official Azure OpenAI Docs: Microsoft Learn

What Do You Think?

Let’s chat! Have you run into weird OpenAI errors? What debugging tricks worked for you? 🚀